Apache Kafka is a popular open-source event-streaming and messaging system that handles very large volumes of data through its distributed, fault-tolerant architecture. In this Kafka beginners tutorial, we explain the basic concepts of Kafka, how Kafka works, Kafka architecture and Kafka use-cases.

Kafka Introduction: An Overview of Kafka

Kafka is an open-source messaging and event streaming platform that organizations use to move high-velocity, high-volume event data between different systems and applications in real time.

Kafka originated at LinkedIn for the company's own use-cases and was made public in 2011 as an open-source platform under the Apache license. Since then it has attracted wide attention for small, medium and large-scale integration projects thanks to its distributed, fault-tolerant, scalable and highly efficient event streaming capabilities.

Kafka uses a publish-subscribe (pub-sub) mechanism for message communication: message producers publish data to the Kafka cluster, and consumers subscribe to the data they are interested in.
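The pub-sub idea can be sketched with a tiny in-memory model. This is only an illustration, not the Kafka client API: the `ToyBroker` class and the topic names are hypothetical, and unlike real Kafka (where consumers pull stored messages) this toy pushes each message straight to its subscribers.

```python
from collections import defaultdict


class ToyBroker:
    """In-memory stand-in for a Kafka cluster (hypothetical, not a Kafka API)."""

    def __init__(self):
        # topic name -> list of subscriber callbacks
        self.subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self.subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Hand the message to every subscriber of this topic
        # and report how many subscribers received it.
        for callback in self.subscribers[topic]:
            callback(message)
        return len(self.subscribers[topic])


broker = ToyBroker()
orders_seen, audit_seen = [], []

# Two independent subscribers are interested in the same topic.
broker.subscribe("orders", orders_seen.append)
broker.subscribe("orders", audit_seen.append)

broker.publish("orders", "order-1 created")
broker.publish("payments", "payment-9 received")  # nobody subscribed to this topic
```

Both subscribers of the "orders" topic receive the order message, while the "payments" message reaches no one because nobody subscribed to that topic.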


Why Kafka Is Important and What Are the Benefits of Using Kafka?

Although there are many key players in the event processing and pub-sub messaging market, Kafka stands out and is widely used for the reasons and benefits explained below:

- Kafka handles high-velocity, high-volume data efficiently thanks to its architectural design. It can process millions of events in real time with low latency and high throughput.

- Kafka is highly scalable due to its distributed and clustered architecture. To meet growing business needs, a cluster can be scaled horizontally by adding more brokers. Topics can also be tuned and scaled through partitioning and replication.

- Kafka is considered highly durable because messages are persisted by default to avoid data loss. This makes Kafka a strong choice for data-critical and mission-critical real-time integrations.

- Kafka's architecture supports fault tolerance, which makes message communication and event processing robust and reliable.

- In terms of cost, Kafka is a solid choice because it is open-source and doesn’t involve the heavy licensing costs of proprietary options.

- With its rich features and strong performance, Kafka fits a number of different use-cases in an organization: as a messaging platform, and as an event streaming platform for activity tracking, application monitoring, real-time event aggregation etc.

Kafka Architecture: Explanation of Kafka Architecture and Its Basic Concepts

The Kafka messaging platform comprises multiple components and actors for publishing, handling, replicating and consuming messages, and for platform management and governance.

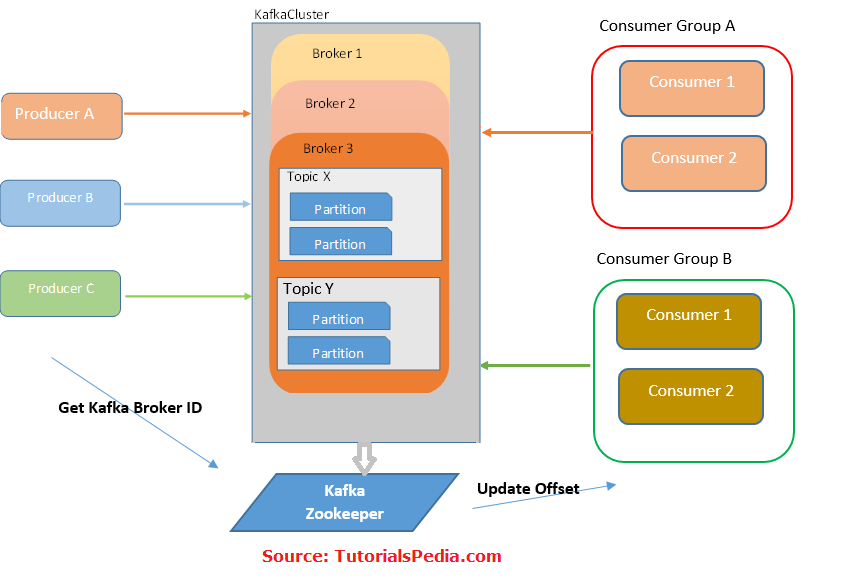

Below diagram provides a picture of high level Kafka architecture

Based on the architecture diagram above, let’s explain the core concepts in detail.

Kafka Concepts Explained: Kafka Producer

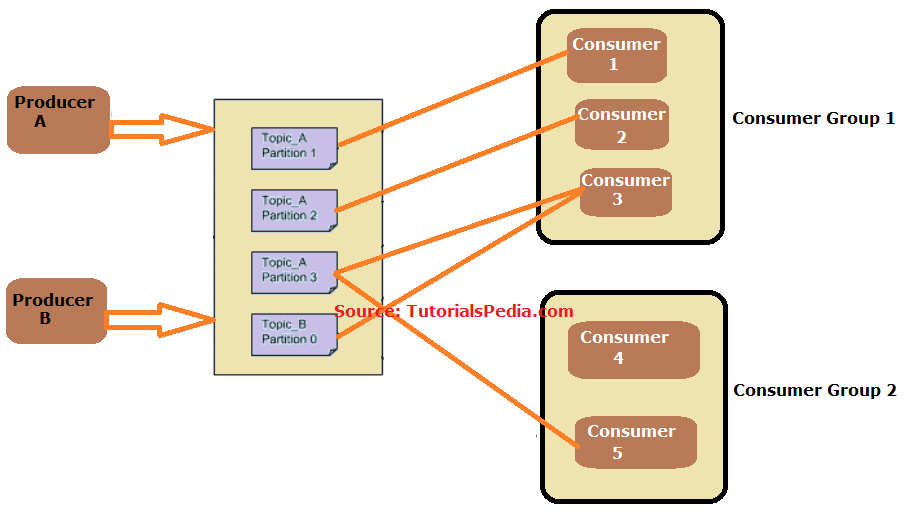

A producer is the source that publishes event data to a Kafka topic. A Kafka producer can publish messages to one or more topics, depending on the business requirements of the scenario.

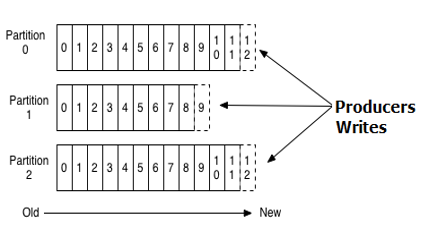

A Kafka message sent by a producer carries information about the topic, and the partition within it, to which the message should be written. Each message is appended to its partition at the next available offset, which keeps messages in order within that partition. As a result, older messages sit at smaller offsets while newer messages appear at higher offsets.
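The offset behaviour can be sketched as an append-only list per partition. This is a minimal model under stated assumptions, not Kafka's storage engine: the `Partition` and `Topic` classes, the topic name and the sample values are all hypothetical.

```python
class Partition:
    """Append-only log; a record's offset is its position in the log."""

    def __init__(self):
        self.records = []

    def append(self, value):
        offset = len(self.records)  # next available offset
        self.records.append(value)
        return offset

    def read(self, offset):
        return self.records[offset]


class Topic:
    """A named topic made of a fixed number of partitions."""

    def __init__(self, name, num_partitions):
        self.name = name
        self.partitions = [Partition() for _ in range(num_partitions)]

    def send(self, partition, value):
        # The caller names the target partition explicitly in this toy model.
        return self.partitions[partition].append(value)


topic = Topic("sensor-readings", num_partitions=2)
first = topic.send(0, "temp=21.5")   # offset 0 in partition 0
second = topic.send(0, "temp=21.7")  # offset 1 in partition 0
other = topic.send(1, "temp=19.0")   # offset 0 in partition 1
```

Offsets grow independently in each partition, so ordering holds within a partition rather than across the whole topic.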

As an example, in the picture below, we can see that new messages in each partition are appended at the end of the partition, at the highest offset:

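A Kafka producer can be configured, through its acks setting, not to wait for acknowledgements from the broker (acks=0), which gives faster delivery at the risk of losing messages. With acks=1 or acks=all, the producer waits for the partition leader, or for all in-sync replicas, to confirm the write.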

Kafka Concepts Explained: Kafka Consumer

Consumers (subscribers) read messages from Kafka topics based on their subscriptions. Multiple consumers can be grouped into a “Consumer Group”, whose members share a Group ID. Each message from a topic is consumed by only one consumer within a particular group. Consumers in a group divide the topic's partitions among themselves for efficient consumption of messages.

In the picture below, we have a scenario with a total of 5 consumers organized into two consumer groups. Here, a message from a particular topic partition can be consumed by only one consumer within a group, but a consumer from the other group can also consume messages from the same topic partition.
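The way a group shares partitions can be sketched as follows. This is a simplified round-robin split, assumed for illustration only; Kafka's real assignment strategies are more involved, and the function name, consumer names and partition count here are hypothetical.

```python
def assign_partitions(partitions, consumers):
    """Spread partitions round-robin over the consumers of ONE group."""
    assignment = {consumer: [] for consumer in consumers}
    for index, partition in enumerate(partitions):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)
    return assignment


partitions = [0, 1, 2, 3]

# Five consumers in total, organized into two independent groups.
group_a = assign_partitions(partitions, ["a1", "a2", "a3"])
group_b = assign_partitions(partitions, ["b1", "b2"])

print(group_a)  # {'a1': [0, 3], 'a2': [1], 'a3': [2]}
print(group_b)  # {'b1': [0, 2], 'b2': [1, 3]}
```

Within each group every partition has exactly one owner, yet both groups cover all four partitions, so each group receives the full stream of messages.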

Kafka Concepts Explained: Kafka Topic

A topic is a stream of messages belonging to a specific category. Topics are divided into partitions, and each topic has at least one partition. Messages in a partition are immutable and are stored in an ordered sequence, each identified by an offset.

In Kafka, replication is done at the partition level. Each partition can have one or more replicas, meaning its messages are copied across several Kafka brokers in the cluster. Replication follows a leader-follower principle: one replica acts as the leader and the others are followers. By default, all read and write operations go through the leader, and the followers replicate the leader's data.
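Leader-follower replication of a single partition can be sketched like this. It is a toy model with hypothetical class names and broker IDs: writes land on the leader only, and followers catch up by copying whatever part of the leader's log they are missing.

```python
class Replica:
    """One copy of a partition's log, hosted on a given broker."""

    def __init__(self, broker_id):
        self.broker_id = broker_id
        self.log = []


class ReplicatedPartition:
    def __init__(self, broker_ids):
        self.replicas = [Replica(broker_id) for broker_id in broker_ids]
        self.leader = self.replicas[0]  # first replica starts as leader

    def followers(self):
        return [r for r in self.replicas if r is not self.leader]

    def write(self, value):
        # Writes go to the leader only; the return value is the new offset.
        self.leader.log.append(value)
        return len(self.leader.log) - 1

    def sync_followers(self):
        # Each follower copies the tail of the leader's log it does not have yet.
        for follower in self.followers():
            missing = self.leader.log[len(follower.log):]
            follower.log.extend(missing)


partition = ReplicatedPartition(broker_ids=[1, 2, 3])
partition.write("event-a")
partition.write("event-b")
lag_before_sync = [len(f.log) for f in partition.followers()]  # followers are behind
partition.sync_followers()
logs_after_sync = [f.log for f in partition.followers()]
```

After `sync_followers()` runs, every follower holds the same ordered log as the leader, which is what allows a follower to take over later without losing messages.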

Kafka Concepts Explained: Kafka Broker & Cluster

A broker is a server in a Kafka environment that provides producers and consumers with services for storing and retrieving data. A stand-alone Kafka setup contains at least one broker.

A collection of brokers forms a Kafka cluster to achieve distributed, fault-tolerant event processing. In ZooKeeper-based deployments, the cluster is managed by ZooKeeper so that all brokers work together in a coordinated manner.

Kafka Concepts Explained: Kafka Zookeeper

In ZooKeeper-based deployments, the management and coordination of Kafka brokers in a cluster is achieved through ZooKeeper (newer Kafka releases can instead run in KRaft mode, without ZooKeeper). ZooKeeper notifies all nodes when the topology of the Kafka cluster changes, e.g. when brokers or topics are added or removed, or when a broker goes down or comes back up.

ZooKeeper also supports leader/follower election among brokers. When a leader fails, one of the followers takes over as leader.
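The failover step can be sketched as a small function. This is a simplified rule assumed for illustration: the function name and broker IDs are hypothetical, and the sketch ignores details a real cluster takes into account, such as whether a follower is fully caught up with the leader.

```python
def elect_leader(replicas, live_brokers, current_leader):
    """Keep the current leader if it is alive, else promote the first live replica."""
    if current_leader in live_brokers:
        return current_leader
    for broker_id in replicas:
        if broker_id in live_brokers:
            return broker_id
    return None  # no live replica left: the partition is unavailable


replicas = [1, 2, 3]  # brokers that hold a copy of the partition

leader = elect_leader(replicas, live_brokers={1, 2, 3}, current_leader=1)
leader_after_failure = elect_leader(replicas, live_brokers={2, 3}, current_leader=leader)
leader_all_down = elect_leader(replicas, live_brokers=set(), current_leader=leader)

print(leader, leader_after_failure, leader_all_down)  # 1 2 None
```

When broker 1 goes down, broker 2 is promoted; when no replica is alive, the function returns `None` to signal that the partition cannot be served.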

Kafka Interview Questions Answers

In another article, I discuss Kafka interview questions and answers for developers in detail. That article should help you get a feel for the questions that may be asked in Kafka job interviews.

Kafka Use Cases

Kafka, with its publish-subscribe messaging features and its event streaming capabilities, can be used for a variety of use-cases. Here are a few examples:

- Kafka can be used for stream processing to stream, aggregate, enrich and transform data from multiple sources. E.g. real-time data from sensors or vehicles can be streamed, gathered, enriched, aggregated and made available to downstream systems and applications (consumers), which take the necessary actions based on the event data.

- Kafka can be used for website activity tracking. E.g. on an e-commerce website, events can be generated and published based on user actions, and consumer applications can use this event data for analytics, business intelligence, marketing and user-experience improvements.

- Kafka can be used for application health monitoring in an organization with a large network of systems and applications. Applications can generate events based on usage patterns, resource utilization, abnormal activity etc., and pre-emptive and corrective actions can be taken based on the event data.

- Kafka can be used as a messaging platform for real-time messaging, as a replacement for traditional messaging systems.
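As a rough illustration of the website activity tracking use-case, the sketch below shows what a consumer application might do with the events it receives. The event fields, the sample data and the `count_views` function are hypothetical, and a plain list stands in for the messages read from a topic.

```python
from collections import Counter

# Sample events, standing in for messages read from an activity-tracking topic.
events = [
    {"user": "u1", "action": "view", "page": "/shoes"},
    {"user": "u2", "action": "view", "page": "/shoes"},
    {"user": "u1", "action": "add_to_cart", "page": "/shoes"},
    {"user": "u3", "action": "view", "page": "/hats"},
]


def count_views(events):
    """Aggregate page views per page, ignoring other kinds of action."""
    views = Counter()
    for event in events:
        if event["action"] == "view":
            views[event["page"]] += 1
    return views


views = count_views(events)
print(views.most_common())  # [('/shoes', 2), ('/hats', 1)]
```

In a real deployment the same aggregation would run continuously over incoming events rather than over a fixed list.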

To learn how to install Kafka on Windows, refer to my other tutorial, where I explain in detail how to install Kafka on Windows as a Windows Service.

Feel free to comment below if you have any queries or any further thoughts on this topic.
